Key Steps to Operationalize AI Governance

- Choose the Right Framework

- Design Your Living AI Policy

- Define Your Governance Roles and Structure

- Map Your Model Inventory

- Control Third-Party AI Risks

- Manage Risks and Impact

In a 2024 report, the Australian Securities & Investments Commission (ASIC) analysed 624 AI use cases across 23 regulated financial institutions. The findings are deeply concerning. Only 52% of the entities had policies referencing fairness, inclusivity or accessibility in the use of AI. None had implemented specific mechanisms to ensure contestability, meaning clear processes for consumers to understand, challenge, or appeal automated decisions. Even more troubling, in 30% of the cases reviewed, the AI models had been developed by third parties, and some organisations couldn’t even identify the algorithmic techniques behind them.

These are just some examples of what falls under the scope of AI governance: implementing policies, processes, practices, and frameworks that guide the ethical and responsible development and use of AI. As AI becomes more deeply integrated into organisations and governments, the risks of misuse grow more evident. This reinforces the need for a well-defined and strategic approach to governance.

That’s why, in collaboration with Parker & Lawrence Research, a leading market research firm specialising in AI, risk, and compliance, we’re exploring how to operationalise AI governance, drawing on our combined research and client engagements to make AI governance practical.

Missed our last post? Start here: What is AI Governance? And why is it Dominating the Enterprise AI Agenda?

Key Steps to Operationalize AI Governance

1. Identify Your Obligations

The first step in operationalizing AI governance is to map your legal and market obligations by asking a critical question: Is my organisation required to comply with specific AI standards or regulations?

AI compliance generally falls into two categories: regulations, which are must-haves, and voluntary standards, which are nice-to-haves.

Must-have regulations are legally binding, and non-compliance can lead to serious legal and financial consequences. These regulations set the foundation for responsible AI use and require organizations to implement formal governance structures and documentation. Key examples include:

- EU AI Act – Establishes a risk-based approach to regulating AI systems, with strict requirements for high-risk applications.

- UK Data Protection Act – Enforces data privacy standards, including transparency, accountability, and fairness in the use of AI technologies.

On the other hand, the nice-to-have standards are voluntary guidelines and frameworks that serve as industry best practices. While not legally enforceable, they play a critical role in promoting ethical AI development and strengthening stakeholder trust. In many cases, they become market-driven requirements, as large clients may demand adherence as a condition for collaboration, or organisations may adopt them to enhance efficiency and resource management. Examples include:

- OECD AI Principles – Encourage AI that is innovative, trustworthy, and respects human rights and democratic values.

- ISO/IEC 42001:2023 – A globally recognized standard for AI management systems, offering structured guidance on implementing responsible AI governance.

When mapping your obligations and choosing an AI governance framework, it ultimately comes down to asking: What is the purpose of integrating AI governance in our organisation? Is it to meet legal obligations, gain competitive advantage, strengthen brand reputation, or improve operational control? Clarity here will determine which combination of regulations and voluntary standards you should adopt.

2. Design Your Living AI Policy

Once your organisation has identified which framework it must comply with, the next step is to operationalise it through a clear and actionable AI policy. An AI policy is a formalised set of principles, rules, and procedures that guide the responsible development, deployment, and use of AI within an organisation. The policy should be a living, editable framework that is revised as systems and obligations change, not a static document. One example is the AI Policy Template developed by the Responsible AI Institute (RAI Institute). With 14 detailed sections, it covers essential domains like governance, procurement, workforce management, compliance, documentation, and AI lifecycle oversight.

3. Define Your Governance Roles and Structure

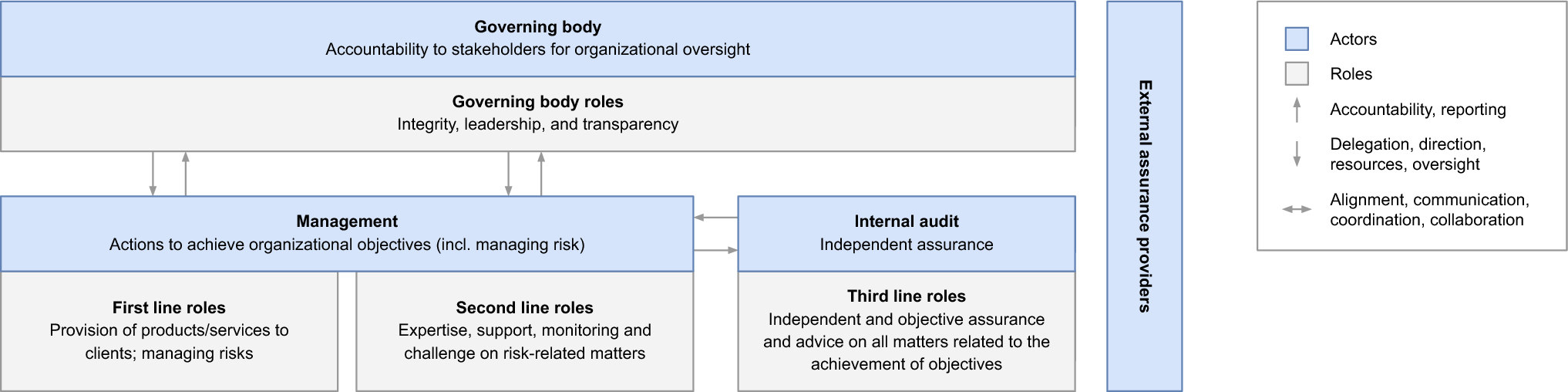

One of the first practical steps in bringing such a policy to life is the creation of a dedicated governance structure—one that clearly defines responsibilities and ensures both accountability and oversight. The Three Lines of Defense (3LoD) model provides a solid and widely adopted foundation for doing just that.

At the top sits the governing body, typically the Board of Directors, ultimately accountable to stakeholders. Its role is to uphold organisational integrity, align strategy, and ensure compliance with ethical and regulatory standards. To exercise effective oversight, the board must rely on independent information sources—not only from executives but also from internal audit or ethics committees that offer an unfiltered view of risk.

Beneath the board, management executes strategy and oversees risk across two lines. The first line includes operational teams—such as AI research and product development—who manage risk directly by building systems responsibly and ensuring compliance. The second line—risk, legal, compliance, and ethics—supports them with expertise, policy development, performance monitoring, and by operationalizing governance principles.

The third line, internal audit, offers independent assurance to the board, assessing how well risks are identified and controlled. In AI organisations, this requires audit functions capable of addressing AI-specific risks—like model misuse, fairness violations, or systemic impact—beyond traditional financial or compliance concerns.

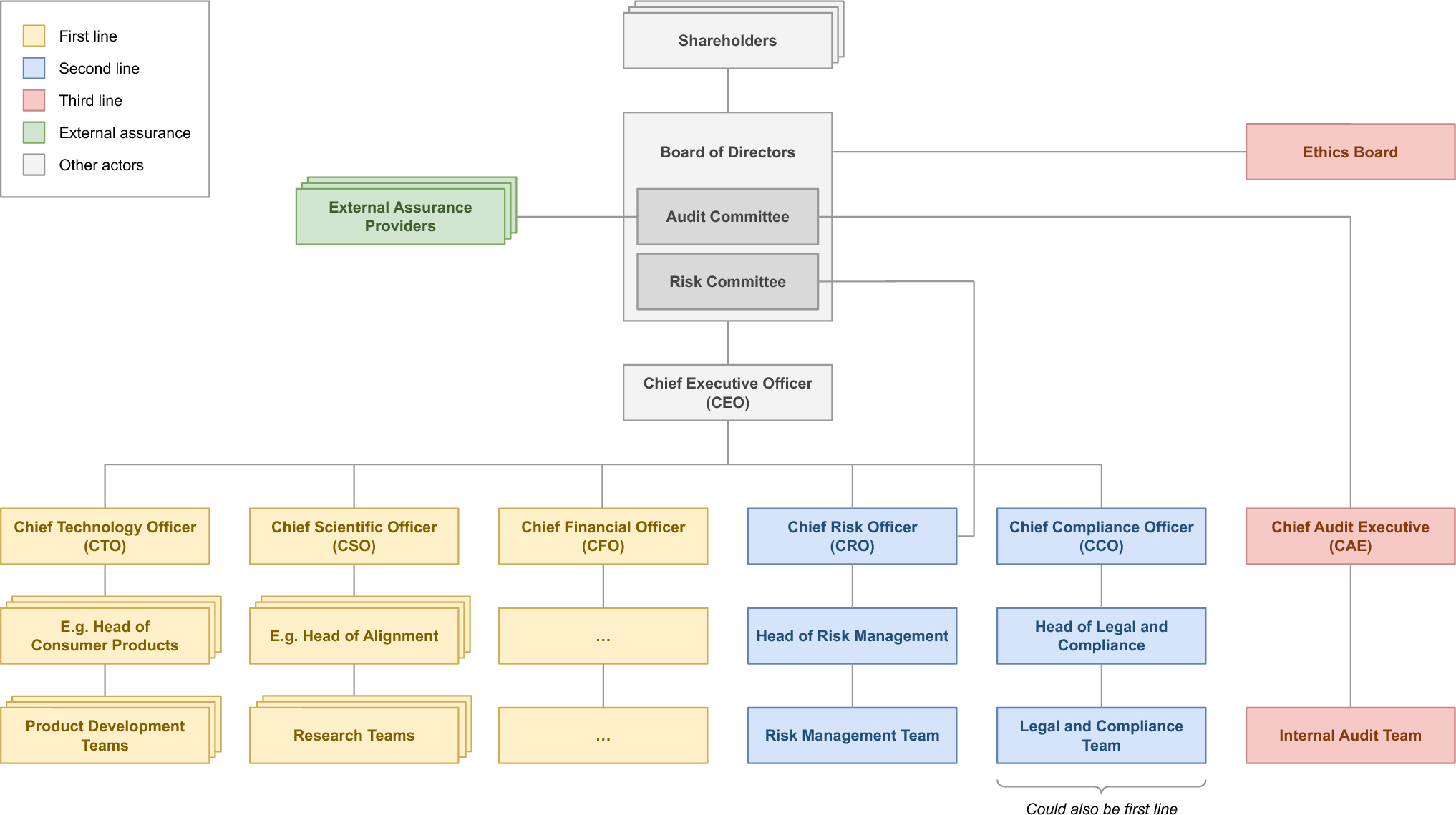

The chart below shows a sample organisational structure of an AI company, with equivalent responsibilities mapped across the three lines of defence.

4. Map Your Model Inventory

Effective AI governance requires full visibility into all AI systems used across your organisation. This starts with building and maintaining a centralised model inventory that lists all AI systems—whether developed, acquired, or resold.

The inventory should include key metadata: types of data used (especially if it involves personal data), model type, algorithm, and deployment context. This visibility is essential for managing risks, supporting audits, and ensuring continuous improvement.

This inventory must be actively maintained, with regular attestations from model owners and integration into broader risk and compliance workflows. Organisations can start with simple tools like spreadsheets, but enterprise-scale or high-risk environments benefit from dedicated platforms that automate discovery, metadata capture, and monitoring.

A well-maintained model inventory reduces blind spots, strengthens audit readiness, and supports risk management throughout the AI lifecycle.
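As a rough illustration of the inventory described above, the sketch below models one inventory entry and flags entries whose owner attestation is out of date. The field names, the sample records, and the 180-day attestation window are assumptions made for this example, not a prescribed schema.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ModelRecord:
    """One row of a hypothetical model inventory (field names are illustrative)."""
    name: str
    owner: str
    origin: str               # "developed", "acquired", or "resold"
    model_type: str
    algorithm: str
    data_types: list[str]
    uses_personal_data: bool
    deployment_context: str
    last_attested: date       # date the owner last confirmed the record


def overdue_attestations(inventory, today, max_age_days=180):
    """Return records whose last attestation is older than max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    return [record for record in inventory if record.last_attested < cutoff]


# Sample data invented for the example.
inventory = [
    ModelRecord("credit-scoring-v2", "risk-team", "developed", "classifier",
                "gradient boosting", ["financial", "personal"], True,
                "loan approvals", date(2024, 1, 15)),
    ModelRecord("support-chatbot", "it-team", "acquired", "language model",
                "unknown", ["support tickets"], False,
                "internal helpdesk", date(2024, 9, 1)),
]

stale = overdue_attestations(inventory, today=date(2024, 10, 1))
print([record.name for record in stale])  # ['credit-scoring-v2']
```

A spreadsheet with the same columns serves the same purpose at small scale. The point is that every system has a named owner and a dated attestation that can be checked automatically.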

5. Risk Management

Effective risk management is a cornerstone of responsible AI. Organisations must address both internally developed AI systems and externally sourced AI components, ensuring that risks are identified, evaluated, and mitigated across the entire AI lifecycle.

5.a) Internally Developed AI

Organisations must implement structured, ongoing processes to identify, analyse, evaluate, and monitor risks throughout the AI lifecycle—from design and development to deployment, operation, and decommissioning. Mitigation controls should match the complexity and potential impact of each use case. For example, an internal chatbot needs less stringent controls than a credit scoring system.

Risk management typically involves two main phases:

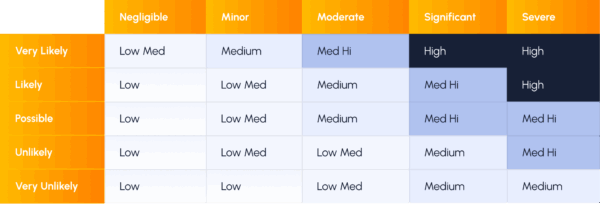

- Risk Analysis: This phase involves identifying the assets, data, or operations at risk and estimating the likelihood and consequences of potential adverse events. A common tool is the risk matrix (see the figure above), which helps prioritise risk treatment based on severity and probability.

- Risk Treatment: Once risks are identified and analyzed, this phase focuses on designing and implementing strategies to reduce those risks to an acceptable or “residual” level. These strategies may include technical safeguards, procedural controls, and contingency plans to prevent harm, respond to emergencies, and maintain business continuity. Organisations must assign clear responsibility for each mitigation action and ensure all relevant stakeholders understand their roles and obligations.
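The risk analysis phase can be sketched in a few lines of code. The example below scores each risk as likelihood multiplied by severity on a 5×5 matrix and ranks the results for treatment. The scale labels, the thresholds for "high" and "medium", and the sample risks are all assumptions chosen for illustration; each organisation would define its own.

```python
# Hypothetical 1-5 scales; real matrices may use different labels or sizes.
LIKELIHOOD = {"rare": 1, "unlikely": 2, "possible": 3, "likely": 4, "almost certain": 5}
SEVERITY = {"negligible": 1, "minor": 2, "moderate": 3, "major": 4, "severe": 5}


def risk_score(likelihood, severity):
    """Score a risk as likelihood x severity (range 1 to 25)."""
    return LIKELIHOOD[likelihood] * SEVERITY[severity]


def risk_level(score):
    """Map a score to a band; the cut-offs here are illustrative only."""
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


# Sample risks invented for the example: (description, likelihood, severity).
risks = [
    ("chatbot gives an outdated answer", "likely", "minor"),
    ("biased credit decisions", "possible", "severe"),
    ("model outage", "unlikely", "minor"),
]

# Highest score first, so treatment effort goes to the most serious risks.
ranked = sorted(risks, key=lambda r: risk_score(r[1], r[2]), reverse=True)
for name, likelihood, severity in ranked:
    score = risk_score(likelihood, severity)
    print(f"{name}: score={score}, level={risk_level(score)}")
```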

Recognising that eliminating risk entirely is not feasible, organisations must aim to reduce it to levels that are both justifiable and manageable, while ensuring transparency and accountability in their risk governance. The threshold for acceptable risk should be formally defined and approved by senior leadership, because setting it is not merely a technical or legal exercise but a strategic decision reflecting the organisation’s values and risk appetite. Senior leadership must also ensure that the residual risk (the level of risk that remains after all mitigation measures have been implemented) is consistently evaluated and kept within the boundaries of what the organisation has defined as acceptable. If residual risk exceeds the threshold, organisations must apply additional controls or reconsider the AI system’s design.
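The threshold check described above can be expressed as a simple rule. This is a minimal sketch under a strong simplifying assumption: that the combined effect of all controls can be summarised as a single effectiveness fraction between 0 and 1 that scales down the inherent risk score. The function names and the sample numbers are hypothetical.

```python
def residual_score(inherent_score, control_effectiveness):
    """Estimate residual risk, assuming controls remove a fixed fraction of inherent risk."""
    if not 0.0 <= control_effectiveness <= 1.0:
        raise ValueError("control_effectiveness must be between 0 and 1")
    return inherent_score * (1.0 - control_effectiveness)


def review_decision(inherent_score, control_effectiveness, acceptable_threshold):
    """Compare residual risk with the threshold approved by senior leadership."""
    residual = residual_score(inherent_score, control_effectiveness)
    if residual <= acceptable_threshold:
        return "accept"
    return "add controls or redesign"


# Threshold of 6 is an invented value standing in for the approved limit.
print(review_decision(15, 0.75, acceptable_threshold=6))  # accept
print(review_decision(20, 0.5, acceptable_threshold=6))   # add controls or redesign
```

In practice residual risk is usually reassessed by re-scoring likelihood and severity with controls in place, so the single fraction here is only a stand-in for that judgement.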

In addition to technical risks, organisations must assess the broader societal and ethical impacts of their AI systems, considering potential effects on individuals, demographic groups, and society at large. These assessments must be sensitive to the specific social, cultural, and legal contexts in which the AI systems operate.

The evaluation framework should address questions such as:

- Could the system lead to biased outcomes or discriminatory practices?

- Are there risks of infringing on privacy or fundamental rights?

- What long-term societal effects could emerge from widespread use of the AI system?

These considerations ensure that organizations not only manage technical risk but also uphold human-centric values throughout the AI lifecycle.

5.b) Third-Party AI

As organisations increasingly rely on external AI components within their AI systems, third-party management becomes a critical governance challenge. Conducting thorough vendor risk assessments is essential to ensure external partners comply with internal policies and regulatory requirements.

Before onboarding any generative AI vendor, it’s essential to assess risk through structured due diligence. This includes examining data sources, model transparency, fairness, and security practices. A highly recommended tool for this is the FS-ISAC Generative AI Vendor Risk Assessment, which includes three core components: an Internal Relevance Check to determine business needs and alignment, a Vendor Risk Evaluation to collect technical details on data handling and controls, and a Final Report that consolidates findings for procurement, legal, and compliance teams. Organisations should also include AI-specific contractual clauses, assign clear internal accountability for third-party AI oversight, and establish a clear incident response process in case of harm, bias, breaches, or failures.
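The three-part flow described above (relevance check, vendor evaluation, final report) can be tracked with a small data structure. The sketch below is not the FS-ISAC questionnaire and does not reproduce its content. The class, the field names, and the recommendation wording are hypothetical, and the required topics are simply the four due-diligence areas named in the paragraph above.

```python
from dataclasses import dataclass, field

# The four due-diligence areas named in the text.
REQUIRED_TOPICS = ("data sources", "model transparency", "fairness", "security practices")


@dataclass
class VendorAssessment:
    """Hypothetical record of one vendor's due-diligence status."""
    vendor: str
    business_need_confirmed: bool                    # outcome of the internal relevance check
    evaluation: dict = field(default_factory=dict)   # topic -> True if evidence is satisfactory


def final_report(assessment):
    """Consolidate findings into a summary for procurement, legal, and compliance."""
    open_items = [t for t in REQUIRED_TOPICS if not assessment.evaluation.get(t, False)]
    if not assessment.business_need_confirmed:
        recommendation = "do not proceed: no confirmed business need"
    elif open_items:
        recommendation = "hold: resolve open items"
    else:
        recommendation = "proceed to contracting"
    return {
        "vendor": assessment.vendor,
        "open_items": open_items,
        "recommendation": recommendation,
    }


# Sample vendor invented for the example; fairness evidence has not been supplied.
assessment = VendorAssessment(
    vendor="ExampleGenAI Ltd",
    business_need_confirmed=True,
    evaluation={"data sources": True, "model transparency": True, "security practices": True},
)
report = final_report(assessment)
print(report["recommendation"], report["open_items"])
```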

In summary, effective AI governance isn’t about ticking regulatory boxes or drafting static policies. It’s about building a proactive, dynamic framework that aligns every AI-related decision with your organisation’s legal obligations, strategic goals, and evolving societal expectations.